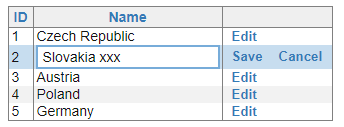

Yesterday, we got a question from one of our DotVVM customers. He was using the Business Pack GridView with the inline editing feature and asked us how to allow the user to save changes in the row by pressing Enter.

Default button in forms

DotVVM itself doesn’t include any specific functionality to handle the keyboard actions – we rely on default behavior in HTML.

The situation is quite easy to solve when you create a simple form – to make the button respond to the Enter key, you need to make it a “submit” button, and it needs to be inside a <form> element.

    <form>
        <div>
            <label>User Name</label>
            <dot:TextBox Text="{value: UserName}" />
        </div>
        <div>
            <label>Password</label>
            <dot:TextBox Text="{value: Password}" Type="Password" />
        </div>
        <div>
            <dot:Button Text="Sign In" Click="{command: SignIn()}"
                        IsSubmitButton="true" />
        </div>
    </form>

The only thing you need to do is to set IsSubmitButton to true so that the button is rendered with type="submit". And of course, the form fields and the button must be inside the same <form> element.

Modal dialogs and GridView inline editing

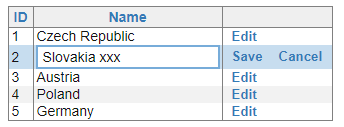

A slightly more interesting situation occurs in modal dialogs and in the GridView control, where you want to allow the users to edit a single row and save the changes by pressing Enter.

The ModalDialog control has the ContentTemplate and FooterTemplate child elements, so you will have the form fields in one template and the save button in the other. You would need to put the entire modal dialog in a <form> element to make it work, and since forms in HTML cannot be nested, it might be an issue if you have more complicated scenarios.

Using the default button while editing data in GridView is not possible at all, because the table row is a <tr> element and you cannot wrap its cells in a <form>. You would have to put the entire table in a <form> element, which is not nice, and there would be multiple submit buttons if you want to allow the user to edit any row.

Moreover, there is no standard way to react to the Escape key if the user wants to cancel the edit.

Extending DotVVM

It might be easy enough to google for a piece of jQuery code which will find the <input> elements in the form, catch the Enter press and click the correct button.

However, it is not difficult to write a generic solution for this problem and make it reusable. Basically, we need to define a container in which the Enter and Escape keys will be redirected to a particular “default” or “cancel” button. It should behave like the <form> element does, but work on any element, not only on <form>.

In DotVVM, you can declare attached properties that can be added to any HTML element or DotVVM control. It is the same concept as attached properties in WPF or other XAML-based frameworks.

The attached property in DotVVM can render additional HTML attributes or Knockout data-bindings to the element or control on which it is applied.

What I want to achieve is something like this:

    <tr data-bind="dotvvm-formhelpers-defaultbuttoncontainer: true">
        ...
        <td>
            <dot:Button Text="Save" ...
                        data-dotvvm-formhelpers-defaultbutton="true" />
            <dot:Button Text="Cancel" ...
                        data-dotvvm-formhelpers-cancelbutton="true" />
        </td>
    </tr>

The <tr> element specifies my custom Knockout binding handler which catches all Enter and Escape key presses from its children. If Enter is pressed inside the <tr> element, this handler will find the control marked with the data-dotvvm-formhelpers-defaultbutton attribute and click it. The same is done for the Escape key, only the data attribute is different.

I didn’t want to use button IDs, as there will be multiple rows in the grid and I would need to generate unique IDs for the buttons. Marking the control with a data attribute looks nicer to me.
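To illustrate why a data attribute scoped to the container is enough, here is a minimal sketch of the lookup. It uses a simplified element model made of plain objects rather than the real DOM, and the helper names node, row and findFirstMarked are made up for this illustration.

```javascript
// Simplified element model (NOT the real DOM): attributes plus child nodes.
function node(attributes, children) {
    return { attributes: attributes || {}, children: children || [] };
}

// Depth-first, document-order search for the first descendant that carries
// the given attribute with the value "true".
function findFirstMarked(container, attributeName) {
    for (const child of container.children) {
        if (child.attributes[attributeName] === "true") {
            return child;
        }
        const nested = findFirstMarked(child, attributeName);
        if (nested) {
            return nested;
        }
    }
    return null;
}

const DEFAULT_ATTR = "data-dotvvm-formhelpers-defaultbutton";

// Builds a model of <tr><td><button data-...="true" /></td></tr>.
function row(label) {
    const save = node({ [DEFAULT_ATTR]: "true", text: "Save " + label });
    return node({}, [node({}, [save])]);
}

const firstRow = row("row 1");
const secondRow = row("row 2");

// The search starts at the row, so buttons in other rows are never considered.
const found = findFirstMarked(secondRow, DEFAULT_ATTR);
console.log(found.attributes.text);
```

Because the search always starts at the container, every row can use the same attribute and no unique IDs need to be generated.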

I am setting all attributes and binding handlers to true. Actually, their values are not important at all because they are not used, but I needed something to be there.

The binding handler should also stop the propagation of the event. The grid may be inside a modal dialog which might want to use this concept too, and we don’t want to submit two things with one press of Enter.
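The effect of stopping the propagation can be shown with a small simulation. This is only a sketch: the event bubbling is modelled with plain objects instead of the real DOM, and dispatchKeyUp and createContainer are hypothetical helpers written for this example.

```javascript
// Simplified model of event bubbling: every node has a parent and an optional
// keyup handler. This is NOT the real DOM API, only an illustration.
function dispatchKeyUp(target, keyCode) {
    const event = {
        which: keyCode,
        target: target,
        stopped: false,
        stopPropagation() { this.stopped = true; }
    };
    // Walk from the target up to the root, like a bubbling event does.
    for (let current = target; current && !event.stopped; current = current.parent) {
        if (current.onKeyUp) {
            current.onKeyUp(event);
        }
    }
}

// Hypothetical container: records a "click" on Enter and stops the bubbling.
function createContainer(name, parent, log) {
    return {
        name: name,
        parent: parent,
        onKeyUp(e) {
            if (e.which === 13) {
                log.push(name + ": default button clicked");
                e.stopPropagation();
            }
        }
    };
}

const log = [];
const dialog = createContainer("dialog", null, log);
const gridRow = createContainer("row", dialog, log);
const textBox = { name: "textBox", parent: gridRow };

// Enter pressed in a text box inside the row, which sits inside the dialog.
dispatchKeyUp(textBox, 13);
console.log(log);
```

Only the innermost container reacts. Without the stopPropagation call, the dialog would handle the same key press as well.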

So first, let’s declare the attached properties so we can use them in DotVVM markup:

    [ContainsDotvvmProperties]
    public class FormHelpers
    {
        [AttachedProperty(typeof(bool))]
        [MarkupOptions(AllowBinding = false)]
        public static readonly DotvvmProperty DefaultButtonContainerProperty
            = DelegateActionProperty<bool>.Register<FormHelpers>("DefaultButtonContainer", AddDefaultButtonContainer);

        [AttachedProperty(typeof(bool))]
        [MarkupOptions(AllowBinding = false)]
        public static readonly DotvvmProperty IsDefaultButtonProperty
            = DelegateActionProperty<bool>.Register<FormHelpers>("IsDefaultButton", AddIsDefaultButton);

        [AttachedProperty(typeof(bool))]
        [MarkupOptions(AllowBinding = false)]
        public static readonly DotvvmProperty IsCancelButtonProperty
            = DelegateActionProperty<bool>.Register<FormHelpers>("IsCancelButton", AddIsCancelButton);

        private static void AddDefaultButtonContainer(IHtmlWriter writer, IDotvvmRequestContext context, DotvvmProperty property, DotvvmControl control)
        {
            writer.AddKnockoutDataBind("dotvvm-formhelpers-defaultbuttoncontainer", "true");
        }

        private static void AddIsDefaultButton(IHtmlWriter writer, IDotvvmRequestContext context, DotvvmProperty property, DotvvmControl control)
        {
            writer.AddAttribute("data-dotvvm-formhelpers-defaultbutton", "true");
        }

        private static void AddIsCancelButton(IHtmlWriter writer, IDotvvmRequestContext context, DotvvmProperty property, DotvvmControl control)
        {
            writer.AddAttribute("data-dotvvm-formhelpers-cancelbutton", "true");
        }
    }

As you can see, I have added the FormHelpers class to the project. It contains three attached properties:

- DefaultButtonContainer is used to mark the element in which the keys should be handled.

- IsDefaultButton is used to mark the default button inside the container – it will respond to the Enter key.

- IsCancelButton is used to mark the cancel button inside the container – it will respond to the Escape key.

DelegateActionProperty.Register lets you create a DotVVM property that calls a method before the element is rendered. This is the right place for us to render the Knockout data-bind expression for the first property, and the data attributes for the other properties.

Notice that the class is marked with the ContainsDotvvmProperties attribute. This is necessary for DotVVM to be able to discover these properties when the application starts.

The binding handler

All the magic happens inside the Knockout binding handler. It is a very powerful tool for extensibility and if you learn how to create your own binding handlers, you’ll get to the next level of interactivity. And thanks to DotVVM and its concept of resources and controls, it is very easy to bundle these binding handlers in a DLL and reuse them in multiple projects.

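    ko.bindingHandlers["dotvvm-formhelpers-defaultbuttoncontainer"] = {
        init: function (element, valueAccessor, allBindings, viewModel, bindingContext) {
            // keyup bubbles, so this catches key presses from any child of the container
            $(element).keyup(function (e) {
                var buttons = [];
                if (e.which === 13) {
                    // Enter: look for the default button
                    buttons = $(element).find("*[data-dotvvm-formhelpers-defaultbutton=true]");
                } else if (e.which === 27) {
                    // Escape: look for the cancel button
                    buttons = $(element).find("*[data-dotvvm-formhelpers-cancelbutton=true]");
                }
                if (buttons.length > 0) {
                    // blur first so the edited value is written to the viewmodel
                    $(e.target).blur();
                    $(buttons[0]).click();
                    // do not let an outer container handle the same key press
                    e.stopPropagation();
                }
            });
        },
        update: function (element, valueAccessor, allBindings, viewModel, bindingContext) {
            // nothing to do: the binding value is always true
        }
    };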

The binding handler is just an object with init and update functions. The init function is called whenever an element with data-bind="dotvvm-formhelpers-defaultbuttoncontainer: …" appears in the page. It doesn’t matter if the element is there from the beginning or if it appears later (for example, when a new row is added to the grid). It is called in all these cases.

The update function is called whenever the value of the expression in the data-bind attribute changes. Since we always have true here, we don’t need anything in the update function.
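For contrast, here is a sketch of a handler where the update function does have something to do. It is not part of the FormHelpers code: the binding name example-keyhandling-enabled and the data-key-handling attribute are hypothetical, and the small stand-in object is only there so that the sketch also runs outside a browser where the Knockout global ko is missing.

```javascript
// Use the real Knockout global when present; otherwise fall back to a tiny
// stand-in (an assumption made only so the sketch runs outside a browser).
const knockout = typeof ko !== "undefined" ? ko : {
    bindingHandlers: {},
    unwrap: function (value) { return typeof value === "function" ? value() : value; }
};

// Hypothetical binding name, used only for this illustration.
knockout.bindingHandlers["example-keyhandling-enabled"] = {
    // Called once, when the binding is first applied to the element.
    init: function (element, valueAccessor) {
        element.setAttribute("data-key-handling-initialized", "true");
    },
    // Called after init, and again whenever an observable read here changes.
    update: function (element, valueAccessor) {
        const enabled = !!knockout.unwrap(valueAccessor());
        element.setAttribute("data-key-handling", enabled ? "on" : "off");
    }
};

// Manual walk-through with a fake element, imitating the calls Knockout makes.
const fakeElement = {
    attributes: {},
    setAttribute(name, value) { this.attributes[name] = value; }
};
const handler = knockout.bindingHandlers["example-keyhandling-enabled"];
let enabledFlag = true;
const accessor = function () { return enabledFlag; };

handler.init(fakeElement, accessor);
handler.update(fakeElement, accessor);   // data-key-handling is now "on"
enabledFlag = false;
handler.update(fakeElement, accessor);   // data-key-handling is now "off"
```

In a real page, Knockout makes these calls itself. A handler like this is only needed when the bound value can change, which is not the case for the always-true value used in this article.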

As you can see, I just subscribed to the keyup event on the element which gets this binding handler. This event bubbles from the control which received the key press to the root of the document. If the key code is 13 (Enter) or 27 (Escape), I look for the button with the correct data attribute.

If there is such a button (if there are more of them, the first one is used), I click it and stop the propagation of the event.

I need to call blur before clicking the button because when the user changes the value of a text field, it is written in the viewmodel when the control loses focus. I need to trigger this event manually before the click event is triggered on the button. Otherwise, the new value wouldn’t be stored in the viewmodel at the right time.
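The same steps can also be sketched without jQuery. This variant is an assumption of mine, not the code used in this article: it reads e.key instead of the deprecated e.which (older browsers may report "Esc" instead of "Escape"), and selectorForKey and handleKeyUp are hypothetical helper names.

```javascript
// Maps a KeyboardEvent.key value to the selector of the button to click.
function selectorForKey(key) {
    if (key === "Enter") {
        return '[data-dotvvm-formhelpers-defaultbutton="true"]';
    }
    if (key === "Escape" || key === "Esc") {
        return '[data-dotvvm-formhelpers-cancelbutton="true"]';
    }
    return null;
}

// Returns true when the key press was redirected to a button.
function handleKeyUp(container, e) {
    const selector = selectorForKey(e.key);
    if (!selector) {
        return false;
    }
    const button = container.querySelector(selector); // first match only
    if (!button) {
        return false;
    }
    // Blur first so the edited value is written to the viewmodel.
    if (e.target && typeof e.target.blur === "function") {
        e.target.blur();
    }
    button.click();
    // Do not let an outer container handle the same key press.
    e.stopPropagation();
    return true;
}

// Registration, only when Knockout is loaded as the global `ko` (browser).
if (typeof ko !== "undefined") {
    ko.bindingHandlers["dotvvm-formhelpers-defaultbuttoncontainer"] = {
        init: function (element) {
            element.addEventListener("keyup", function (e) {
                handleKeyUp(element, e);
            });
        }
    };
}
```

Keeping the logic in a plain function makes it possible to exercise it with fake objects, and the resource would no longer need jQuery as a dependency.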

The last thing is to register this script file so DotVVM knows about it. Place this code in DotvvmStartup.cs:

    config.Resources.Register("FormHelpers", new ScriptResource()
    {
        Location = new UrlResourceLocation("~/FormHelpers.js"),
        Dependencies = new [] { "knockout", "jquery" }
    });

We also must make sure that the script is present in the page where we use the attached properties. The easiest way is to add the following control in the page (or in the master page if you use this often).

<dot:RequiredResource Name="FormHelpers" />

Currently, we don’t have any mechanism to tell the property to request this resource automatically, so you need to include the resource manually.

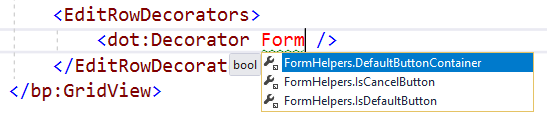

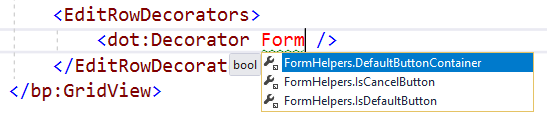

Using the attached properties

Now the markup can look like this:

    <tr FormHelpers.DefaultButtonContainer>
        ...
        <td>
            <dot:Button Text="Save" ...
                        FormHelpers.IsDefaultButton />
            <dot:Button Text="Cancel" ...
                        FormHelpers.IsCancelButton />
        </td>
    </tr>

The nice thing about the true values of these properties is that you don’t need to write ="true" in the markup; you can just specify the property name.

But wait, how do I apply the attached property to the GridView table row? The <tr> element is rendered by the control itself, it is not in my code.

Luckily, there is the RowDecorators property which allows you to “decorate” the <tr> element rendered by the control. There is also EditRowDecorators, which is used for rows that are in the inline editing mode.

    <bp:GridView DataSource="{value: Countries}" InlineEditing="true">
        <Columns>
            <bp:GridViewTextColumn ValueBinding="{value: Id}" HeaderText="ID" IsEditable="false" />
            <bp:GridViewTextColumn ValueBinding="{value: Name}" HeaderText="Name" />
            <bp:GridViewTemplateColumn>
                <ContentTemplate>
                    <dot:Button Text="Edit" Click="{command: _root.Edit(_this)}" />
                </ContentTemplate>
                <EditTemplate>
                    <dot:Button Text="Save" Click="{command: _root.Save(_this)}" FormHelpers.IsDefaultButton />
                    <dot:Button Text="Cancel" Click="{command: _root.CancelEdit(_this)}" FormHelpers.IsCancelButton />
                </EditTemplate>
            </bp:GridViewTemplateColumn>
        </Columns>
        <EditRowDecorators>
            <dot:Decorator FormHelpers.DefaultButtonContainer />
        </EditRowDecorators>
    </bp:GridView>

As you can see, I have used <dot:Decorator> to apply the FormHelpers.DefaultButtonContainer property to the rows.

The buttons are rendered in the EditTemplate and I have just applied the properties to them.

Now the user can change the value and use the Enter and Escape keys to click the Save or Cancel button.

Conclusion

Knockout binding handlers are very powerful and can help to improve the user experience. In fact, most of the DotVVM controls are just cleverly written binding handlers.

Thanks to the attached properties and the strongly-typed nature of DotVVM, you also have IntelliSense in the editor, and you can bundle these pieces of infrastructure in your custom DLLs or NuGet packages and reuse them in multiple projects.

The FormHelpers class may be included in a future release of DotVVM Business Pack since this is quite a common user requirement.

If you have any questions, feel free to ask on our Gitter chat.