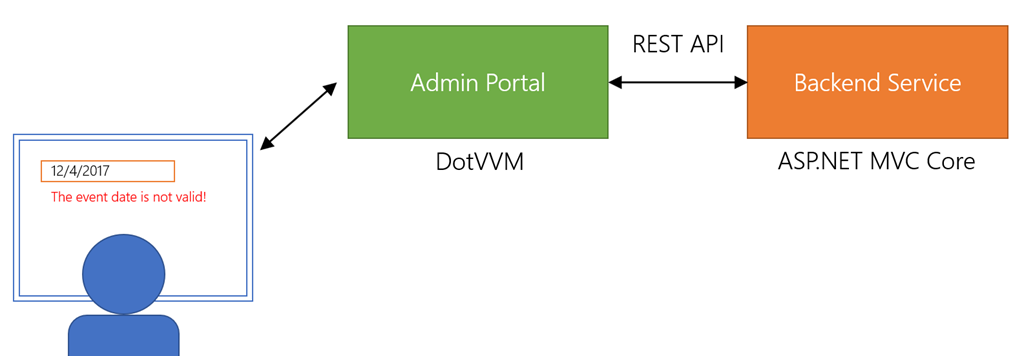

In my last live coding session, I had a web application built with DotVVM which didn’t connect to a database directly. It consumed a REST API instead, which is quite a common approach in microservices applications.

The validation logic should definitely be implemented in the backend service. The REST API can be invoked from other microservices and we certainly don’t want to duplicate the logic in multiple places. In addition, the validation rules might require data which would not be available in the admin portal (the uniqueness of the user’s e-mail address, for example).

Basically, the backend service needs to report the validation errors to the admin portal and the admin portal needs to report the error in the browser so the text field can be highlighted.

The solution is quite easy, as both DotVVM and ASP.NET Core MVC use the same model validation mechanisms.

Implementing the Validation Logic

In my case, the API controller accepts and returns the following object (some properties were stripped out). I have used the standard validation attributes (Required) for the simple validation rules and implemented the IValidatableObject interface to provide enhanced validation logic for dependencies between individual fields.

    using System;
    using System.Collections.Generic;
    using System.ComponentModel.DataAnnotations;
    using System.Linq;

    public class EventDTO : IValidatableObject
    {
        public string Id { get; set; }

        [Required]
        public string Title { get; set; }

        public string Description { get; set; }

        public List<EventDateDTO> Dates { get; set; } = new List<EventDateDTO>();

        ...

        public DateTime RegistrationBeginDate { get; set; }

        public DateTime RegistrationEndDate { get; set; }

        [Range(1, int.MaxValue)]
        public int MaxAttendeeCount { get; set; }

        // Rules that span several fields and cannot be expressed by attributes.
        public IEnumerable<ValidationResult> Validate(ValidationContext validationContext)
        {
            if (!Dates.Any())
            {
                yield return new ValidationResult("The event must specify at least one date!");
            }

            if (RegistrationBeginDate >= RegistrationEndDate)
            {
                yield return new ValidationResult("The date must be greater than registration begin date!", new[] { nameof(RegistrationEndDate) });
            }

            for (var i = 0; i < Dates.Count; i++)
            {
                // Errors on child objects are reported with an indexed property path.
                if (Dates[i].BeginDate < RegistrationEndDate)
                {
                    yield return new ValidationResult("The date must be greater than registration end date!", new[] { nameof(Dates) + "[" + i + "]." + nameof(EventDateDTO.BeginDate) });
                }

                if (Dates[i].BeginDate >= Dates[i].EndDate)
                {
                    yield return new ValidationResult("The date must be greater than begin date!", new[] { nameof(Dates) + "[" + i + "]." + nameof(EventDateDTO.EndDate) });
                }
            }

            ...
        }
    }

Notice that when I validate the child objects, the property path is composed like this: Dates[0].BeginDate.
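To check which member names the validator actually reports, the rules can be exercised outside of ASP.NET with `Validator.TryValidateObject`. The following is only a minimal sketch: `SampleEvent`, `SampleDate` and `ValidationDemo` are hypothetical, trimmed-down stand-ins for the DTOs above, not classes from the project.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Linq;

// Hypothetical, reduced versions of the DTOs, just enough to run the rules.
public class SampleDate
{
    public DateTime BeginDate { get; set; }
    public DateTime EndDate { get; set; }
}

public class SampleEvent : IValidatableObject
{
    [Required]
    public string Title { get; set; }

    public List<SampleDate> Dates { get; set; } = new List<SampleDate>();

    public IEnumerable<ValidationResult> Validate(ValidationContext validationContext)
    {
        for (var i = 0; i < Dates.Count; i++)
        {
            if (Dates[i].BeginDate >= Dates[i].EndDate)
            {
                // The member name carries the full path to the child property.
                yield return new ValidationResult(
                    "The date must be greater than begin date!",
                    new[] { $"{nameof(Dates)}[{i}].{nameof(SampleDate.EndDate)}" });
            }
        }
    }
}

public static class ValidationDemo
{
    // Returns one "memberName: message" string per reported error.
    public static List<string> GetErrors(object instance)
    {
        var results = new List<ValidationResult>();
        Validator.TryValidateObject(instance, new ValidationContext(instance), results, validateAllProperties: true);
        return results
            .SelectMany(r => r.MemberNames.DefaultIfEmpty(""), (r, member) => member + ": " + r.ErrorMessage)
            .ToList();
    }
}
```

For an event with a title and one date that ends before it begins, `GetErrors` reports a single error under the path `Dates[0].EndDate`. Keep in mind that the standalone `Validator` class calls `Validate` only after all attribute checks have passed, so an event without a title would report just the `Title` error in this sketch.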

Reporting the validation errors on the API side

When the client posts an invalid object, I want the API to respond with HTTP 400 Bad Request and provide the list of errors. In ASP.NET Core MVC, there is the ModelState object which can be returned from the API. It is serialized as a map from property paths to the list of errors for each property.

I have implemented a simple action filter which verifies that the model is valid. If not, it produces the HTTP 400 response.

    using Microsoft.AspNetCore.Mvc;
    using Microsoft.AspNetCore.Mvc.Filters;

    public class ModelValidationFilterAttribute : ActionFilterAttribute
    {
        public override void OnActionExecuting(ActionExecutingContext context)
        {
            if (!context.ModelState.IsValid)
            {
                // Short-circuit the action and return the errors as HTTP 400.
                context.Result = new BadRequestObjectResult(context.ModelState);
            }
            else
            {
                base.OnActionExecuting(context);
            }
        }
    }

You can apply this attribute on a specific action, or as a global filter. If something is wrong, I get something like this from the API:

    {
        "Title": [
            "The event title is required!"
        ],
        "RegistrationEndDate": [
            "The date must be greater than registration begin date!"
        ],
        "Dates[0].BeginDate": [
            "The date must be greater than registration end date!"
        ]
    }
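The two ways of applying the filter mentioned above might look roughly like this. It is a sketch under assumptions: `SampleDto`, `SampleController`, the `api/sample` route and `MvcRegistration` are hypothetical names used only for illustration, and the filter is repeated so the snippet is complete.

```csharp
using System.ComponentModel.DataAnnotations;
using Microsoft.AspNetCore.Mvc;
using Microsoft.AspNetCore.Mvc.Filters;
using Microsoft.Extensions.DependencyInjection;

// The filter from the article, repeated here in a shortened form.
public class ModelValidationFilterAttribute : ActionFilterAttribute
{
    public override void OnActionExecuting(ActionExecutingContext context)
    {
        if (!context.ModelState.IsValid)
        {
            context.Result = new BadRequestObjectResult(context.ModelState);
        }
    }
}

// Hypothetical payload, used only to have something to validate.
public class SampleDto
{
    [Required]
    public string Title { get; set; }
}

// Hypothetical controller showing the per-action variant.
[Route("api/sample")]
public class SampleController : Controller
{
    // Option 1: opt in on a single action.
    [HttpPost]
    [ModelValidationFilter]
    public IActionResult Post([FromBody] SampleDto item) => Ok(item);
}

public static class MvcRegistration
{
    // Option 2: register the filter globally; call this from Startup.ConfigureServices.
    public static void AddMvcWithValidationFilter(IServiceCollection services)
    {
        services.AddMvc(options => options.Filters.Add(new ModelValidationFilterAttribute()));
    }
}
```

With the global registration, every action gets the check, so the attribute on individual actions becomes redundant.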

Consuming the API from the DotVVM app

I am using the Swashbuckle.AspNetCore library to expose the Swagger metadata of my REST API. In my DotVVM application, I am using NSwag to generate the client proxy classes.

This tooling can save quite a lot of time and is very helpful in larger projects. If you make any change in your API and regenerate the Swagger client, you will get compile errors in all places that need to be adjusted, which is great.

On the other hand, I am still missing a lot of features in the Swagger pipeline, for example support for generic types. Also, even though NSwag provides a lot of extensibility points, sometimes I had to use nasty hacks or regex matching to make it generate the things I really needed.

Swagger generates the ApiClient class with async methods corresponding to the individual controller actions. I can call these methods from my DotVVM viewmodels.

    public async Task Save()
    {
        await client.ApiEventsPostAsync(Item);
        Context.RedirectToRoute("EventList");
    }

Now, whenever I get HTTP 400 from the API, I need to read the list of validation errors, fill the DotVVM ModelState with them and report them to the client.

Reporting the errors from DotVVM

I have created a class called ValidationHelper with a Call method. It accepts a delegate and invokes it. If the delegate throws a SwaggerException with the status code 400, the helper handles the exception. Actually, there are two overloads: one for void methods and one for methods returning a result.

    public static class ValidationHelper
    {
        public static async Task Call(IDotvvmRequestContext context, Func<Task> apiCall)
        {
            try
            {
                await apiCall();
            }
            catch (SwaggerException ex) when (ex.StatusCode == "400")
            {
                HandleValidation(context, ex);
            }
        }

        public static async Task<T> Call<T>(IDotvvmRequestContext context, Func<Task<T>> apiCall)
        {
            try
            {
                return await apiCall();
            }
            catch (SwaggerException ex) when (ex.StatusCode == "400")
            {
                HandleValidation(context, ex);
                return default(T);
            }
        }

        ...
    }

The most interesting part is, of course, the HandleValidation method. It parses the response and puts the errors in the DotVVM model state:

    private static void HandleValidation(IDotvvmRequestContext context, SwaggerException ex)
    {
        // The response body is the serialized ModelState: property path -> error messages.
        var invalidProperties = JsonConvert.DeserializeObject<Dictionary<string, string[]>>(ex.Response);

        foreach (var property in invalidProperties)
        {
            foreach (var error in property.Value)
            {
                context.ModelState.Errors.Add(new ViewModelValidationError()
                {
                    PropertyPath = ConvertPropertyName(property.Key),
                    ErrorMessage = error
                });
            }
        }

        context.FailOnInvalidModelState();
    }

And finally, there is one little caveat: DotVVM uses a different format of property paths than ASP.NET MVC, because the viewmodel on the client side is wrapped in Knockout observables. Instead of using Dates[0].BeginDate, we need to use Dates()[0]().BeginDate, because all getters in Knockout are just function calls.

This hack won’t be necessary in future versions of DotVVM, as we plan to implement a mechanism that will detect and fix this automatically.

    private static string ConvertPropertyName(string property)
    {
        var sb = new StringBuilder();
        for (int i = 0; i < property.Length; i++)
        {
            if (property[i] == '.')
            {
                sb.Append("().");
            }
            else if (property[i] == '[')
            {
                sb.Append("()[");
            }
            else
            {
                sb.Append(property[i]);
            }
        }
        return sb.ToString();
    }
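The parsing and the path conversion can be tried out without DotVVM or the generated client. The sketch below is an illustration under assumptions: `ErrorMapping` and `Map` are hypothetical names, and `System.Text.Json` is swapped in for `JsonConvert` only so that the snippet has no package dependencies. The conversion logic is the same as in `ConvertPropertyName` above.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.Json;

public static class ErrorMapping
{
    // Same conversion as ConvertPropertyName in the article.
    public static string ConvertPropertyName(string property)
    {
        var sb = new StringBuilder();
        foreach (var c in property)
        {
            if (c == '.') sb.Append("().");
            else if (c == '[') sb.Append("()[");
            else sb.Append(c);
        }
        return sb.ToString();
    }

    // Turns the HTTP 400 response body into (Knockout property path, message) pairs.
    public static List<KeyValuePair<string, string>> Map(string responseJson)
    {
        var invalidProperties = JsonSerializer.Deserialize<Dictionary<string, string[]>>(responseJson);
        var result = new List<KeyValuePair<string, string>>();
        foreach (var property in invalidProperties)
        {
            foreach (var error in property.Value)
            {
                result.Add(new KeyValuePair<string, string>(ConvertPropertyName(property.Key), error));
            }
        }
        return result;
    }
}
```

Given the key `Dates[0].BeginDate`, `Map` produces the path `Dates()[0]().BeginDate`, while a top-level name such as `Title` passes through unchanged.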

Using the validation helper

There are two ways to use the API we have just created. You can wrap your API call in the ValidationHelper.Call method. Alternatively, you can implement a DotVVM exception filter that will call the HandleValidation method directly.

    public async Task Save()
    {
        await ValidationHelper.Call(Context, async () =>
        {
            await client.ApiEventsPostAsync(Item);
        });
        Context.RedirectToRoute("EventList");
    }

In the user interface, the validation looks the same as always - you mark an element with Validator.Value="{value: property}" and configure the behavior of validation on the element itself, or on any of its ancestors, for example Validation.InvalidCssClass="has-error" to decorate the elements with the has-error class when the property is not valid.

The important thing is that you need to set the save button's Validation.Target to the object you are passing to the API, so that the property paths lead to the corresponding properties on the validation target.

You can look at the ErrorDetail page and its viewmodel in the OpenEvents project repository to see how things work.

Next Steps

Currently, this solution validates only in the backend, not in the DotVVM application and of course not in the browser. To make the client-side validation work, the DotVVM application would have to see the validation attributes on the EventDTO object. Right now, the object is generated using Swagger, so it doesn’t have these attributes.

The NSwag generator could be configured not to generate the DTOs, so we would be able to keep the DTOs in a class library project referenced by both the admin portal and the backend app. This would allow the same validation to run in the admin portal, and because DotVVM would see the validation attributes, it could enforce some validation rules in the browser.