In the previous blog post, I wrote about the problems of legacy ASP.NET Web Forms applications. It is often not possible to rewrite them from scratch, because new features have to be delivered every month, so I’d like to dig into one of the ways of modernizing these applications.

In this part, I will write about how to replace Forms Authentication with OWIN Security middlewares. I needed to do this in one of my older Web Forms apps recently, because it was using the Microsoft.WebPages.OAuth library to authenticate users using Facebook, Twitter and Google. This library was part of ASP.NET Web Pages and is very old, so it doesn’t support the current version of the OAuth protocol.

OWIN was a new infrastructure for ASP.NET applications on .NET Framework and a predecessor of ASP.NET Core. It supports various authentication middlewares: besides the Cookie Security Middleware, which can replace Forms Authentication, there are also middlewares for all commonly used social networks and other authentication scenarios.

Forms Authentication

I have created a sample ASP.NET Web Forms application (it’s in the ModernizingWebFormsSample.Auth folder) with a few pages and Forms Authentication.

It uses the built-in membership provider to store information about users. The database should be created automatically and it should reside in the App_Data folder.

In Global.asax, there is code that creates a default user account (e-mail: [email protected], password: Pa$$w0rd) when the application is launched.
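Seeding such an account could look roughly like the sketch below. It is not the code from the sample project: the e-mail and password are placeholders I made up, and the only real API calls are Membership.GetUser and Membership.CreateUser.

```csharp
using System;
using System.Web;
using System.Web.Security;

public class Global : HttpApplication
{
    protected void Application_Start(object sender, EventArgs e)
    {
        // Placeholder values, not the credentials used by the sample project.
        const string email = "user@example.com";
        const string password = "ChangeMe-123!";

        // GetUser returns null for an unknown user name, so the account
        // is created only on the first launch.
        if (Membership.GetUser(email) == null)
        {
            // Throws MembershipCreateUserException if the provider rejects
            // the values (for example, a password that is too weak).
            Membership.CreateUser(email, password, email);
        }
    }
}
```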

The Login.aspx page contains a login form built with the <asp:Login> control. This control uses the membership provider to validate the user credentials (by calling Membership.ValidateUser) and then it calls FormsAuthentication.SetAuthCookie to issue a cookie with an authentication token.

If you don’t want to use the <asp:Login> control, you can always build the form yourself and issue the authentication cookie manually by calling the methods mentioned above.
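A hand-built login form boils down to those two calls. Here is a minimal sketch, assuming a reference to System.Web; the FormsLogin class and the TrySignIn method are hypothetical names and are not part of the sample project.

```csharp
using System.Web.Security;

// Hypothetical helper; call it from the click handler of your own login form.
public static class FormsLogin
{
    // Returns true and issues the Forms Authentication cookie when the
    // configured membership provider accepts the credentials.
    public static bool TrySignIn(string userName, string password, bool rememberMe)
    {
        if (!Membership.ValidateUser(userName, password))
        {
            return false;
        }

        // The second argument (createPersistentCookie) keeps the cookie
        // across browser sessions when it is true.
        FormsAuthentication.SetAuthCookie(userName, rememberMe);
        return true;
    }
}
```

In a code-behind handler you would then call something like FormsLogin.TrySignIn(UserName.Text, Password.Text, RememberMe.Checked) and redirect on success.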

The concepts

If you are not familiar with how membership providers and identity in ASP.NET work, here is a brief explanation.

Almost every web application uses some concept of user accounts, often with different permissions and access levels.

The information about the users needs to be stored somewhere. Sometimes the users are stored in a SQL database, sometimes they are retrieved from Active Directory, and there are many other options.

A membership provider is an abstraction that gives the application a unified API no matter how and where exactly the user info is stored. The membership provider exposes methods for creating, editing and deleting users, validating, changing and resetting their passwords, and so on.

ASP.NET comes with a built-in membership provider, which stores user information in a SQL database located in the App_Data folder (the location can be changed in web.config).

You can implement your own membership provider to be able to store users in your own SQL database, or any other place. You just need to implement several methods like CreateUser, ValidateUser etc.

All ASP.NET controls that work with users (<asp:Login>, <asp:ChangePassword> etc.) work thanks to the concept of membership providers. If you haven’t heard about membership providers before, you are probably using the default one.

You should consider replacing membership providers with ASP.NET Identity, which provides many more options and features, but that’s another story - I will address it in one of the next parts of this series.

Authentication in ASP.NET can work in multiple modes. Most Web Forms applications use Forms Authentication, which relies on an authentication cookie. You can set the authentication mode in web.config, using the system.web/authentication element.
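For reference, a typical Forms Authentication setup in web.config looks something like the fragment below. The loginUrl and timeout values are illustrative assumptions; your application may use different ones.

```xml
<configuration>
  <system.web>
    <!-- Forms Authentication: unauthenticated users are redirected to loginUrl -->
    <authentication mode="Forms">
      <!-- timeout is the ticket lifetime in minutes -->
      <forms loginUrl="~/Login.aspx" timeout="30" />
    </authentication>
  </system.web>
</configuration>
```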

The authentication and membership providers are decoupled, so you should be able to use any authentication mode with any membership provider (or another solution, such as the ASP.NET Identity mentioned above).

OWIN

ASP.NET was always tightly integrated with IIS, and it was quite difficult to run .NET web apps outside IIS. OWIN was created to provide a modular and extensible HTTP server pipeline that can run without IIS dependencies. This allows e.g. self-hosting ASP.NET Web API or SignalR applications in a Windows Service, and other interesting scenarios.

OWIN works with the concept of middlewares. You can register any number of middlewares in the pipeline. Basically, middlewares are just functions with two parameters: the request context and the next middleware. A middleware can look at the request context and process the HTTP request, or pass the request to the next middleware (and optionally do something with the response that middleware produces).
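To make the idea concrete, here is a toy pipeline written with plain delegates. It is only a sketch of the concept and does not use the real OWIN types: RequestContext, Pipeline and Demo are names I made up for the illustration, and it assumes .NET Framework 4.6 or newer (for Task.CompletedTask).

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Toy request context; Katana exposes the real one as IOwinContext.
public class RequestContext
{
    public string Path { get; set; }
    public int StatusCode { get; set; } = 404;
    public List<string> Log { get; } = new List<string>();
}

public static class Pipeline
{
    // A middleware gets the context and a delegate that invokes the next middleware.
    public delegate Task Middleware(RequestContext context, Func<Task> next);

    // Chains the middlewares so that the first registered one runs first.
    public static Func<RequestContext, Task> Build(params Middleware[] middlewares)
    {
        return context =>
        {
            Func<Task> next = () => Task.CompletedTask; // end of the pipeline
            for (int i = middlewares.Length - 1; i >= 0; i--)
            {
                var current = middlewares[i];
                var following = next;
                next = () => current(context, following);
            }
            return next();
        };
    }
}

public static class Demo
{
    public static async Task<RequestContext> RunAsync(string path)
    {
        var app = Pipeline.Build(
            // Wraps the rest of the pipeline: runs code before and after it.
            async (ctx, next) =>
            {
                ctx.Log.Add("before");
                await next();
                ctx.Log.Add("after:" + ctx.StatusCode);
            },
            // Handles only "/hello"; every other request is passed on.
            async (ctx, next) =>
            {
                if (ctx.Path == "/hello")
                {
                    ctx.StatusCode = 200;
                    return;
                }
                await next();
            });

        var context = new RequestContext { Path = path };
        await app(context);
        return context;
    }
}
```

The first middleware sees the request on the way in and the result on the way out, which is the same position an authentication middleware occupies in the real pipeline.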

This mechanism is very flexible and makes it possible to combine multiple frameworks in one application. Authentication also benefits from this concept and can be completely independent of the application itself.

For example, you can register Web API in the OWIN pipeline. When the request URL matches some API controller, Web API will process the request and produce the response. If Web API doesn’t recognize the URL, it will pass the request to the next middleware in the pipeline, which can be e.g. the static files middleware. It will look in the filesystem and return the appropriate file, or again pass control to the next middleware in the pipeline. If no middleware wants to process the request, an HTTP error will be produced by OWIN.

OWIN Security is a set of middlewares which handle the authentication. These middlewares are typically registered at the beginning of the pipeline.

When an HTTP request is received, the authentication middleware will try to authenticate it (by looking at cookies, the Authorization header or something like that), set the user identity in the request context and pass the request to the next middleware.

The application will be able to read the information about the user and doesn’t need to know whether the user was logged in through an authentication cookie or through Windows Authentication.

If the application notices that the user doesn’t have permissions to perform some operation, it may throw an exception, or set the HTTP status code to 401. The authentication middleware will notice that and can take an appropriate action, for example redirect the user to the login page.
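The sketch below shows this interplay with an inline Katana middleware. It assumes the Microsoft.Owin package and that a cookie authentication middleware with a configured login path is registered earlier in the pipeline; the UseAdminGuard name and the /admin path are hypothetical.

```csharp
using Microsoft.Owin;
using Owin;

public static class AdminGuardExtensions
{
    // Hypothetical helper: answers anonymous requests under /admin with 401
    // and lets everything else continue down the pipeline.
    public static IAppBuilder UseAdminGuard(this IAppBuilder app)
    {
        return app.Use(async (context, next) =>
        {
            // The identity set by the authentication middleware, if any.
            var user = context.Authentication.User;
            bool isAnonymous = user == null
                || user.Identity == null
                || !user.Identity.IsAuthenticated;

            if (isAnonymous && context.Request.Path.StartsWithSegments(new PathString("/admin")))
            {
                // An authentication middleware earlier in the pipeline can
                // react to this status, for example by redirecting to the login page.
                context.Response.StatusCode = 401;
                return;
            }

            await next();
        });
    }
}
```

Note that the guard itself knows nothing about cookies or login pages; it only reads the identity and sets a status code.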

OWIN can be integrated with ASP.NET quite easily thanks to the Microsoft.Owin.Host.SystemWeb package. If you install it in the application, the OWIN pipeline will be added before the ASP.NET stack, so you can use authentication middlewares in combination with Web Forms pages (or MVC controllers). And that’s exactly what I needed in my app recently to be able to use OWIN Security libraries, like Cookies, Facebook, Twitter and so on.

In the demo app, I am using the Microsoft.Owin.Security.Cookies package as a replacement for Forms Authentication, because it works in a very similar way: it generates the authentication ticket and stores it in a cookie.

1. Install the Microsoft.Owin.Host.SystemWeb package in the application.

2. Then, install the Microsoft.Owin.Security.Cookies package.

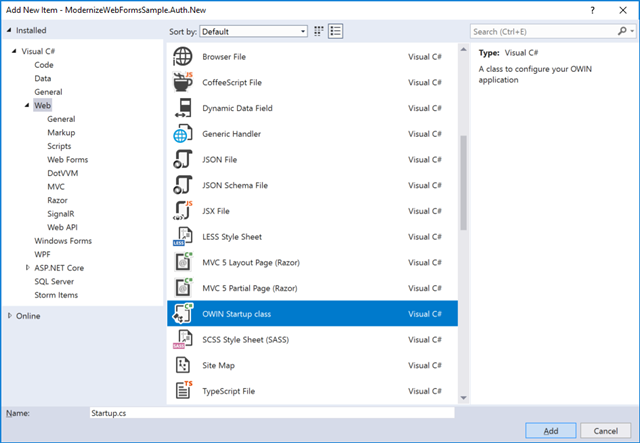

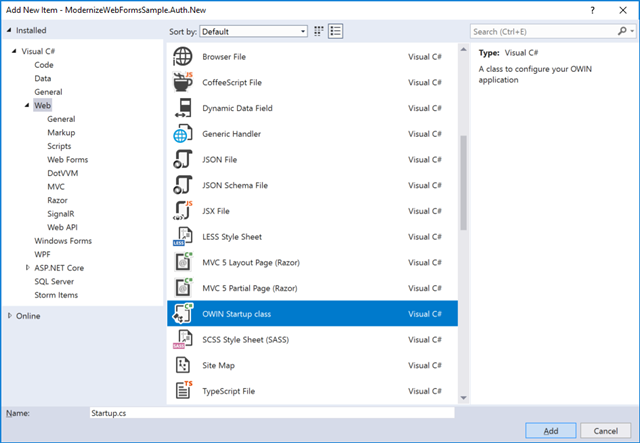

3. Add a new OWIN Startup class to the project. This class is used to configure the OWIN pipeline.

4. Add the following code snippet to the Configuration method in the Startup.cs file. It will register the cookie authentication middleware in the OWIN pipeline.

If you need to adjust the default values like the expiration timeout or cookie domain, you can do it right here in the CookieAuthenticationOptions initializer.

```csharp
app.UseCookieAuthentication(new CookieAuthenticationOptions
{
    LoginPath = new PathString("/Login.aspx")
});
```
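For context, a complete Startup.cs with a few of those defaults adjusted might look like the sketch below. The namespace is my guess based on the sample folder name, and the expiration, cookie name and domain values are purely illustrative.

```csharp
using System;
using Microsoft.Owin;
using Microsoft.Owin.Security.Cookies;
using Owin;

// Tells the SystemWeb host which class configures the OWIN pipeline.
[assembly: OwinStartup(typeof(ModernizingWebFormsSample.Auth.Startup))]

namespace ModernizingWebFormsSample.Auth
{
    public class Startup
    {
        public void Configuration(IAppBuilder app)
        {
            app.UseCookieAuthentication(new CookieAuthenticationOptions
            {
                // Anonymous users hitting a protected page are sent here.
                LoginPath = new PathString("/Login.aspx"),

                // Illustrative values, not taken from the sample project:
                ExpireTimeSpan = TimeSpan.FromMinutes(30),
                SlidingExpiration = true,
                CookieName = ".MyApp.Auth"

                // To share the cookie across subdomains, you could also set:
                // CookieDomain = "example.com"
            });
        }
    }
}
```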

5. To issue an authentication cookie, call Context.GetOwinContext().Authentication.SignIn instead of FormsAuthentication.SetAuthCookie.

You will need to build a ClaimsIdentity which represents the current user. This identity contains a collection of claims, e.g. the username, user ID, list of roles, e-mail and other information about the user. You can add any custom claims to the identity; they will be encrypted and stored in the authentication cookie.

If you are already using ASP.NET Identity, there is a method called CreateIdentity, which will build the ClaimsIdentity for you.

```csharp
// Namespaces used: System.Collections.Generic, System.Security.Claims, System.Web,
// System.Web.Security, Microsoft.Owin.Security, Microsoft.Owin.Security.Cookies
protected void LoginButton_OnClick(object sender, EventArgs e)
{
    if (Membership.ValidateUser(UserName.Text, Password.Text))
    {
        // get user info
        var user = Membership.GetUser(UserName.Text);

        // build a list of claims
        var claims = new List<Claim>();
        claims.Add(new Claim(ClaimTypes.Name, user.UserName));
        claims.Add(new Claim(ClaimTypes.NameIdentifier, user.ProviderUserKey.ToString()));
        if (Roles.Enabled)
        {
            foreach (var role in Roles.GetRolesForUser(user.UserName))
            {
                claims.Add(new Claim(ClaimTypes.Role, role));
            }
        }

        // create the identity
        var identity = new ClaimsIdentity(claims, CookieAuthenticationDefaults.AuthenticationType);

        // issue the authentication cookie
        Context.GetOwinContext().Authentication.SignIn(
            new AuthenticationProperties { IsPersistent = RememberMe.Checked },
            identity);

        Response.Redirect("~/");
    }
    else
    {
        FailureText.Text = "The credentials are not valid!";
    }
}
```
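On later requests, the claims stored in the cookie can be read back from the current user. The sketch below uses only System.Security.Claims; ClaimsReader and ClaimsDemo are hypothetical helper names, and the sample principal just mimics what the cookie middleware would produce.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Security.Claims;

public static class ClaimsReader
{
    // Returns the value of the NameIdentifier claim, or null when it is missing.
    public static string GetUserId(ClaimsPrincipal principal)
    {
        if (principal == null)
        {
            return null;
        }

        var claim = principal.FindFirst(ClaimTypes.NameIdentifier);
        return claim == null ? null : claim.Value;
    }

    // Returns the values of all role claims.
    public static IList<string> GetRoles(ClaimsPrincipal principal)
    {
        return principal.FindAll(ClaimTypes.Role).Select(c => c.Value).ToList();
    }
}

public static class ClaimsDemo
{
    // Builds a principal similar to the one created in the login handler.
    public static ClaimsPrincipal BuildSamplePrincipal()
    {
        var claims = new List<Claim>
        {
            new Claim(ClaimTypes.Name, "alice"),
            new Claim(ClaimTypes.NameIdentifier, "42"),
            new Claim(ClaimTypes.Role, "Admin")
        };

        // "Cookies" is the value of CookieAuthenticationDefaults.AuthenticationType.
        return new ClaimsPrincipal(new ClaimsIdentity(claims, "Cookies"));
    }
}
```

In a page, a call like ClaimsReader.GetUserId(Context.User as ClaimsPrincipal) should return the user ID, assuming the cookie middleware has populated the user for that request.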

To sign out, you need to call the SignOut method:

```csharp
protected void LogoutButton_OnClick(object sender, EventArgs e)
{
    // removes the authentication cookie
    Context.GetOwinContext().Authentication.SignOut();

    Response.Redirect("~/");
}
```

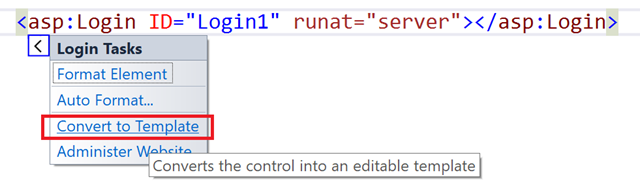

6. If you are using ASP.NET login controls, you may need to replace them with your own mechanisms, because the controls are hard-coded to use Forms Authentication. Unfortunately, there are no extensibility points to replace the static method calls to FormsAuthentication.SetAuthCookie.

This applies to the following controls:

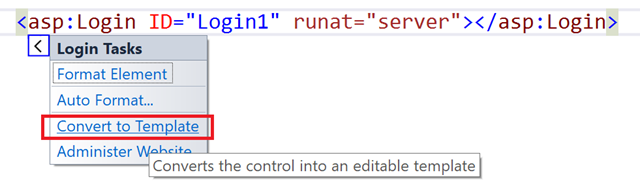

If you are using these controls without specifying your own templates for them, you may use the following quick action to generate their code.

You can now get rid of the Login control and use only the code inside the template. Just add OnClick to the button and use the code from step 5 to perform the authentication.

7. The last thing you need to do is to disable Forms authentication in web.config. Change the authentication mode to None. Don’t worry, there is still the OWIN authentication.

```xml
<authentication mode="None" />
```

Note that the URL authorization mechanism (system.web/authorization element) will still work. OWIN still exposes the ClaimsIdentity through the HttpContext.Current.User property, so the URL authorization module will be able to see whether the user is authenticated and which roles they are in.
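As an illustration, URL authorization rules like the following should keep working with the OWIN cookie middleware. This is a sketch with assumed paths, not the configuration from the sample. As far as I know, the login page is no longer exempted automatically once Forms Authentication is turned off, so the fragment allows it explicitly.

```xml
<configuration>
  <system.web>
    <authentication mode="None" />
    <authorization>
      <!-- "?" stands for anonymous users; they are denied access to the site -->
      <deny users="?" />
    </authorization>
  </system.web>

  <!-- Keep the login page reachable for anonymous users -->
  <location path="Login.aspx">
    <system.web>
      <authorization>
        <allow users="*" />
      </authorization>
    </system.web>
  </location>
</configuration>
```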

You can see the working demo on GitHub in the ModernizingWebFormsSample.Auth.New project.